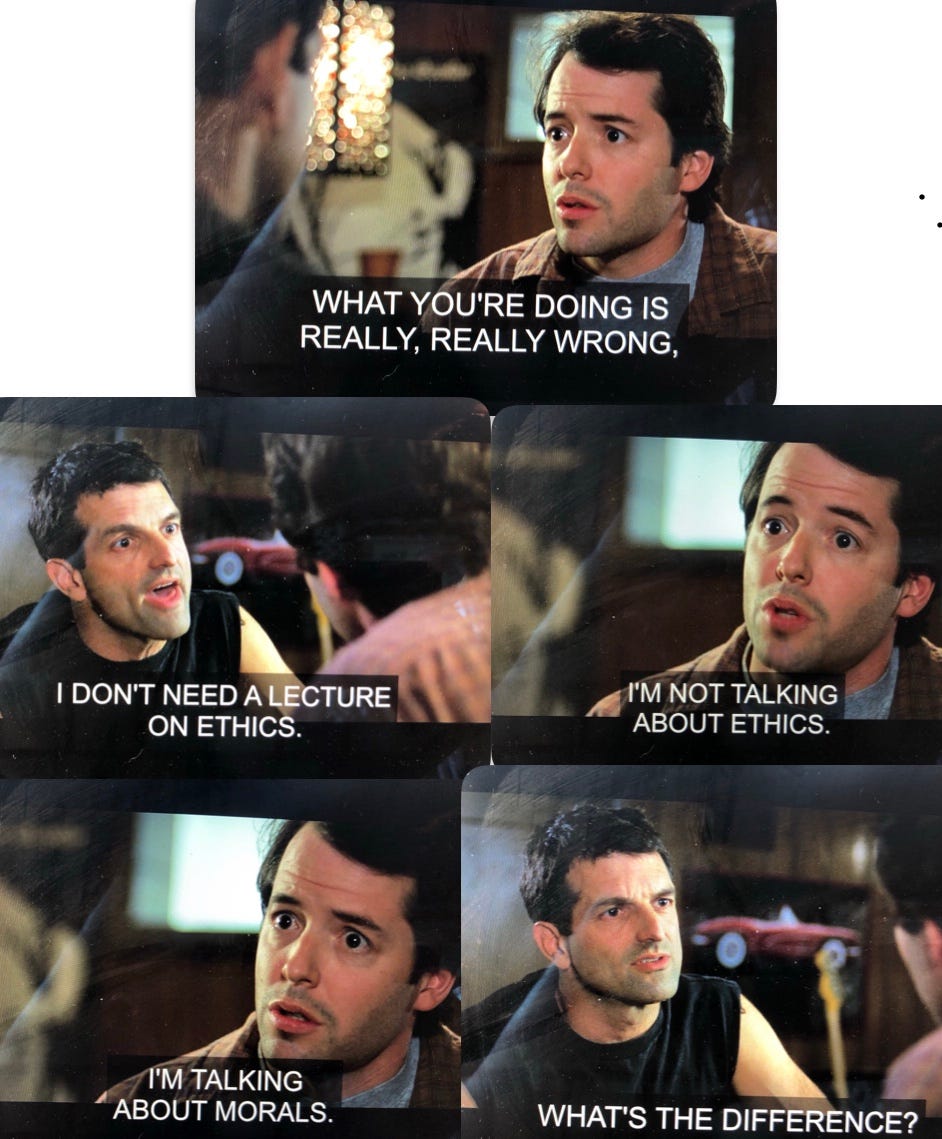

Whenever I hear someone talking about ethics, I immediately think of the scene from “Election” where Matthew Broderick, playing a high school teacher, asks the class to explain ethics vs. morals.

None of the students quite get it right, but it shows how confusing and daunting conversations about ethics can be.

Ethics is something we think of as being reserved for a classroom discussion. In reality, ethical decisions and moral reasoning often rule our daily lives (“Election” illustrates all this ethics business beautifully and comically, making it a true classic and a must-watch if you haven’t seen it).

Ethical concerns about AI have been around for a long time, but it’s only been in the past few years that these conversations have “gone mainstream”, as the Harvard Business Review puts it. Now that AI has infiltrated our daily lives, we must deal with all the ethical ramifications. Like the plot of “Election”, using AI is not a simple case of right vs. wrong.

What do people mean when they talk about ethics and AI?

Concerns about AI fall into a few broad ethical questions:

1. What are tech companies actually allowed to do with AI?

This ethics question focuses on the builders/makers of AI. What types of data should tech companies be allowed to use when building LLMs? Is it ‘stealing’ if OpenAI used articles available online from the New York Times to train ChatGPT? Who is responsible or at fault if an AI model goes rogue and engages in unethical or illegal activity “on its own”?

2. How do we avoid, reduce, or mitigate bias when using AI?

Bias is a huge ethical challenge for AI, and it is not hard to find. If you ask a GenAI tool to generate an image of a doctor, the result will most likely be a white man. Back in 2018, Reuters reported that Amazon had scrapped a secret AI recruiting tool it was developing because it “did not like women” and had taught itself that male candidates were preferable. Much of this bias arises because the data sets used to build things like LLMs are often biased, and it’s not easy to collect data that accurately represents the human population.

3. What role should the government play in controlling and monitoring AI?

In the U.S., the government is notoriously slow when it comes to legislating anything that tech companies are doing (well, except for trying to ban TikTok). AI is no exception. However, some people in the government have been Preparing for the Future of Artificial Intelligence and thinking about how AI will impact public outreach, regulation, governance, security and the economy. For example, self-driving cars use AI, so new regulations are now being proposed to minimize risk (for both drivers and auto manufacturers).

4. Is anything really private in the age of AI?

Privacy is another big ethical concern when talking about AI. Researchers at Stanford argue that because GenAI uses data about people scraped from the internet, it can memorize personal information, which can then enable phishing scams that target people for identity theft and fraud. We’re now living in a world where it’s basically impossible for people using online products or services to escape systematic digital surveillance, and AI will only make our lack of privacy even worse.

5. Is it possible to use artificial intelligence to build a more “responsible” AI system?

Responsible AI is an emerging concept that tech companies like to put on their About page to give people the impression that their AI systems are being designed and used responsibly. I think the reality of tech companies using AI is a little more complicated than this, but several key players in the AI race, like Amazon, have been pushing for clear rules and guidelines on responsible AI practices in an effort to manage risk.

6. What does intellectual property have to do with AI and ethics?

With the advent of GenAI, we’re now being tasked with answering the question: who owns AI-generated content? The person who created it? The GenAI tool that they used? What if someone inputs another person’s art or likeness into a tool like Midjourney and asks it to create something similar? Who owns it now? Is that even allowed?

In the next edition of Prompt Me, I’ll be diving deeper into these questions with the help of someone who’s an expert on all things IP and AI: Anuj Gupta, an attorney and managing partner at First Gen Law. Gupta counsels tech and media companies on complex intellectual property matters.

Look out for part two of my primer on ethics and AI to hit your inbox in the coming weeks :) In the meantime, you can watch “Election” and realize that you still only have a high school-level understanding of morals and ethics.
